Soldier Fight is a multiplayer 2D arena shooter inspired by Soldat. The player's goal is to out-shoot and out-maneuver their opponents to reach the highest score.

Soldier Fight features a client-server networking model written from the ground up in C++ using Godot for rendering. I go into the details of my implementation below:

- High Level Walkthrough

- Sockets

- Serialization and Bitpacking

- Replication

- Delta Compression and Entropy Coding

- Network Simulation

- Reliable UDP

- Client-Side Prediction

- Steam Lobbies and Matchmaking

- Areas of Improvement

The networking is broken down into the following systems:

- Sockets: Abstractions over the Berkeley sockets API

- Serialization: Writing and reading data into streams

- Network Management: Tracks connections and network objects

- Replication: Tracks the state of each network object and responsible for replicating object state to each client

- Reliable UDP: Detects dropped UDP packets and resends their data so that important updates still reach their destination

- Game Services: Integration point for services such as Steamworks or Epic Online Services

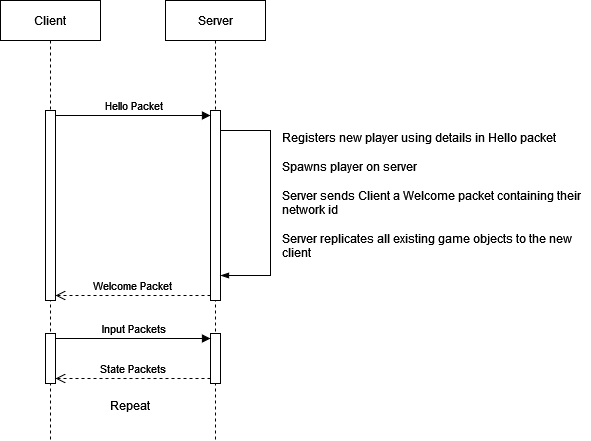

To help visualize some of the systems, here's a high-level walkthrough of what happens when a client first connects to a server:

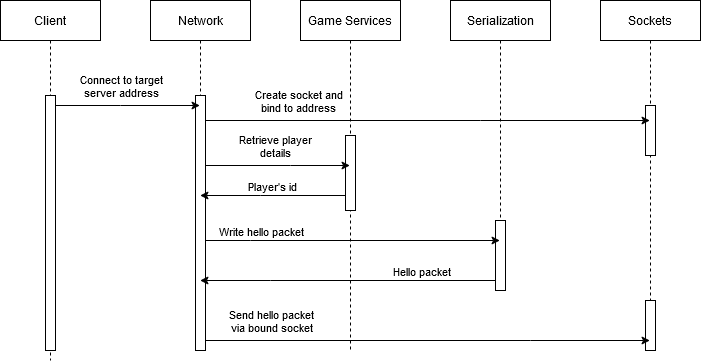

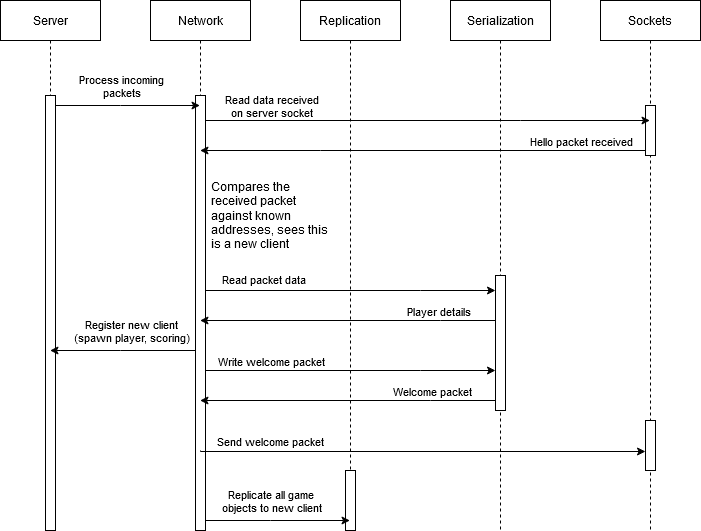

Reality is a bit more complicated so let's take a closer look at the client and server:

Client Sends a Hello Packet

Server receives a Hello packet and welcomes the new client

The Sockets module provides a light abstraction over the Berkeley sockets API. Let's compare creating a UDP socket using Winsock and the Sockets module:

```cpp
SOCKET UDPSocket = socket(AF_INET, SOCK_DGRAM, IPPROTO_UDP);
sockaddr_in Address;
memset(&Address, 0, sizeof(Address));
Address.sin_family = AF_INET;
Address.sin_addr.s_addr = htonl(INADDR_ANY);
Address.sin_port = htons(Port);
bind(UDPSocket, reinterpret_cast<sockaddr*>(&Address), sizeof(Address));
```

Creating a UDP Socket with Winsock

```cpp
mSocket = CreateUDPSocket(INET);
SocketAddress Address(INADDR_ANY, Port);
mSocket->Bind(Address);
```

Using the abstraction

The Serialization module handles reading and writing to streams while providing a standard interface:

```cpp
// Writing data
BitStream Packet;
Packet.Write(15);
Packet.Write("Player 1");
Packet.Write(Vector3<int>{ 0, 0, 0 });

// Reading data
int Health;
Packet.Read(Health);
std::string Name;
Packet.Read(Name);
Vector3<int> Position;
Packet.Read(Position);
```

The module also bitpacks data to minimize the size of each packet, so packets can be kept below the Ethernet MTU of 1500 bytes (roughly 1,470 bytes of payload once the IP and UDP headers are subtracted). For instance, say we have a 1-byte boolean representing whether a player is alive. Since a bool is either 0 or 1, 7 of its 8 bits are wasted if we write it as-is into the packet.

By tracking the index of the next bit to write (mBitHead in the code below), the module knows how much space is left in the current byte when writing to a packet, allowing it to write data to mBuffer without wasting bits.

```cpp
void WriteBits(uint8_t Data, size_t BitCount)
{
    uint32_t NextBitHead = mBitHead + static_cast<uint32_t>(BitCount);

    // If the buffer is too small, expand it
    if (NextBitHead > mBitCapacity)
    {
        ReallocBuffer(std::max(mBitCapacity * 2, NextBitHead));
    }

    // First, the mBitHead, which represents the index of the next bit in the stream to
    // be written, is decomposed into a byte index and a bit index within that byte.
    // Because a byte is 8 bits, the byte index can always be found by dividing by 8,
    // which is the same as shifting right by 3. Similarly, the index of the bit within
    // that byte can be found by examining those same 3 bits that were shifted away in
    // the previous step. Because 0x7 is 111 in binary, bitwise ANDing the mBitHead with
    // 0x7 yields just the 3 bits.
    uint32_t ByteOffset = mBitHead >> 3;
    uint32_t BitOffset = mBitHead & 0x7;

    // Using the ByteOffset as an index into mBuffer array, we find the target byte
    // Then we shift the data left by the bit offset and bitwise OR it into the target
    // byte.
    uint8_t CurrentMask = ~(0xff << BitOffset);
    mBuffer[ByteOffset] = (mBuffer[ByteOffset] & CurrentMask) | (Data << BitOffset);

    // Calculate how many bits were not yet used in our target byte in the buffer
    uint32_t BitsFreeThisByte = 8 - BitOffset;

    // If we needed more space than that, carry to the next byte
    if (BitsFreeThisByte < BitCount)
    {
        // To calculate what to OR into the next byte, the method shifts the data we are
        // serializing right by the number of bits that were free.
        mBuffer[ByteOffset + 1] = Data >> BitsFreeThisByte;
    }

    mBitHead = NextBitHead;
}
```
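The read side is not shown here, so the following is a minimal sketch of how a matching reader could look. `TinyBitStream` is a hypothetical, simplified stand-in for the real BitStream (it uses a `std::vector` instead of mBuffer/ReallocBuffer), and it also masks the input to BitCount bits, which is an extra safety step assumed for this sketch rather than something taken from the project.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical, simplified stand-in for the article's BitStream.
struct TinyBitStream
{
    std::vector<uint8_t> Buffer;
    uint32_t WriteHead = 0; // index of the next bit to write
    uint32_t ReadHead = 0;  // index of the next bit to read

    // Writes the low BitCount bits of Data (BitCount must be 1..8).
    void WriteBits(uint8_t Data, size_t BitCount)
    {
        assert(BitCount >= 1 && BitCount <= 8);
        uint32_t NextHead = WriteHead + static_cast<uint32_t>(BitCount);
        // Grow so every byte we touch exists and starts zeroed.
        Buffer.resize((NextHead + 7) / 8, 0);

        uint32_t ByteOffset = WriteHead >> 3;
        uint32_t BitOffset = WriteHead & 0x7;
        // Drop any bits above BitCount so they cannot leak into the stream.
        uint8_t Value = static_cast<uint8_t>(Data & (0xFFu >> (8 - BitCount)));

        Buffer[ByteOffset] |= static_cast<uint8_t>(Value << BitOffset);
        uint32_t BitsFreeThisByte = 8 - BitOffset;
        if (BitsFreeThisByte < BitCount)
        {
            // Carry the remaining high bits into the next byte.
            Buffer[ByteOffset + 1] |= static_cast<uint8_t>(Value >> BitsFreeThisByte);
        }
        WriteHead = NextHead;
    }

    // Reads BitCount bits (1..8) back in the order they were written.
    uint8_t ReadBits(size_t BitCount)
    {
        assert(BitCount >= 1 && BitCount <= 8);
        assert(ReadHead + BitCount <= WriteHead);
        uint32_t ByteOffset = ReadHead >> 3;
        uint32_t BitOffset = ReadHead & 0x7;

        // Low part comes from the current byte...
        uint8_t Value = static_cast<uint8_t>(Buffer[ByteOffset] >> BitOffset);
        uint32_t BitsFreeThisByte = 8 - BitOffset;
        if (BitsFreeThisByte < BitCount)
        {
            // ...and the high part, if any, from the next byte.
            Value |= static_cast<uint8_t>(Buffer[ByteOffset + 1] << BitsFreeThisByte);
        }
        Value &= static_cast<uint8_t>(0xFFu >> (8 - BitCount));
        ReadHead += static_cast<uint32_t>(BitCount);
        return Value;
    }
};
```

With this sketch, a 1-bit bool, a 6-bit value, and a full byte take 15 bits in total and fit in 2 bytes instead of 3.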

The Replication module is responsible for keeping clients in sync with the server's game state. Each game object can have one of three replication states:

- Create: The client needs to create the object

- Update: The client needs to update the object

- Destroy: The client needs to destroy the object

The module also tracks whether each object has changed since the last network update (see Delta Compression for why). When the server is ready to send the game state to a client, the Replication module loops through each game object and checks whether it has changed. If so, it writes the object's current replication state and any data necessary to perform the action:

```cpp
// mReplicatedGameObjects is a map of NetworkIds to ReplicatedObjects
for (auto& Pair : mReplicatedGameObjects)
{
    ReplicatedObject& Rep = Pair.second;
    if (Rep.HasDirtyState())
    {
        int NetworkId = Pair.first;
        Packet.Write(NetworkId);

        ReplicationState State = Rep.GetState();
        Packet.Write(State);

        // Write only the attributes that changed, then clear the bits that were written
        uint32_t DirtyState = Rep.GetDirtyState();
        uint32_t WrittenState = ReplicateObjectState(Packet, NetworkId, DirtyState);
        Rep.ClearDirtyState(WrittenState);
    }
}
```
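On the receiving end, the client has to act on each replication state it reads. The sketch below is one way that dispatch could look, not the project's actual code: `ClientWorld`, `ClientObject`, and `ReplicationEntry` are hypothetical names, and the entry is assumed to have already been deserialized from the packet.

```cpp
#include <cstdint>
#include <unordered_map>

enum class ReplicationState : uint8_t { Create, Update, Destroy };

// Hypothetical client-side object holding only a 2D position.
struct ClientObject
{
    float X;
    float Y;
};

// Hypothetical, already-deserialized entry from a replication packet.
struct ReplicationEntry
{
    int NetworkId;
    ReplicationState State;
    float X;
    float Y;
};

class ClientWorld
{
public:
    void Apply(const ReplicationEntry& Entry)
    {
        switch (Entry.State)
        {
        case ReplicationState::Create:
        {
            // Create the object (or overwrite a stale one with the same id).
            ClientObject& Object = mObjects[Entry.NetworkId];
            Object.X = Entry.X;
            Object.Y = Entry.Y;
            break;
        }
        case ReplicationState::Update:
        {
            // Ignore updates for objects this client has never created.
            auto It = mObjects.find(Entry.NetworkId);
            if (It != mObjects.end())
            {
                It->second.X = Entry.X;
                It->second.Y = Entry.Y;
            }
            break;
        }
        case ReplicationState::Destroy:
            mObjects.erase(Entry.NetworkId);
            break;
        }
    }

    // Returns nullptr when the object does not exist on this client.
    const ClientObject* Find(int NetworkId) const
    {
        auto It = mObjects.find(NetworkId);
        return It == mObjects.end() ? nullptr : &It->second;
    }

private:
    std::unordered_map<int, ClientObject> mObjects;
};
```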

Given the scale of Soldier Fight, all objects in a match are considered in the replication check and potentially sent to each client. If the game were scaled out further to handle massive arenas, it might be necessary to implement relevancy checks such as view frustum culling or a hierarchical culling technique like binary space partitioning.

To borrow the definition from Source Multiplayer Networking, Delta Compression means the server doesn't send a full world snapshot each time, but rather only changes (a delta snapshot) that happened since the last acknowledged update.

Building off the Replication section above, when the server processes a player's input (e.g. moving, jumping, and shooting) the Replication module is notified to set the state of the player's game object to dirty. This allows the server to skip over any Game Objects that have not changed since the last update, reducing packet size. Each object also tracks what attributes have changed since the last update so only changes in data are written to the packet, preventing unnecessary writes.
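Per-attribute change tracking like this is commonly done with a bitmask. The sketch below assumes that approach; the method names mirror HasDirtyState, GetDirtyState, and ClearDirtyState from the replication loop, while `DirtyTracker`, `MarkDirty`, and the specific attribute bits are hypothetical.

```cpp
#include <cstdint>

// Hypothetical attribute bits for a player object; one bit per attribute.
enum PlayerDirtyBits : uint32_t
{
    DirtyPosition = 1u << 0,
    DirtyHealth   = 1u << 1,
    DirtyAmmo     = 1u << 2
};

class DirtyTracker
{
public:
    // Called when the server changes one or more attributes.
    void MarkDirty(uint32_t Bits) { mDirty |= Bits; }

    // True if any attribute changed since it was last written to a packet.
    bool HasDirtyState() const { return mDirty != 0; }

    uint32_t GetDirtyState() const { return mDirty; }

    // Clears only the bits that were actually written, so an attribute that
    // did not fit in this packet stays dirty for the next one.
    void ClearDirtyState(uint32_t WrittenBits) { mDirty &= ~WrittenBits; }

private:
    uint32_t mDirty = 0;
};
```

A serializer would then test each bit and write only the matching attribute, for example writing the position only when `GetDirtyState() & DirtyPosition` is non-zero.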

Entropy Coding is another compression technique used. Say we are serializing a player's position (a vector of 3 floats, i.e. 12 bytes) in a 3D world:

```cpp
Packet.Write(mPosition.x);
Packet.Write(mPosition.y);
Packet.Write(mPosition.z);
```

This means each time our player moves, we write 12 bytes into the packet. If players are almost always on the ground at z = 0 and rarely change height, we waste 32 bits on each player update. If we instead write a boolean indicating whether the player is on the ground (z = 0), we can reduce those 32 bits to 1 bit for most updates (since booleans can be packed into 1 bit as outlined in Bitpacking above).

```cpp
Packet.Write(mPosition.x);
Packet.Write(mPosition.y);
if (mPosition.z == 0)
{
    // Common case: a single bit says "z is 0", and z itself is skipped
    Packet.Write(true);
}
else
{
    Packet.Write(false);
    Packet.Write(mPosition.z);
}
```

While I used this approach in Soldier Fight, there are other, more sophisticated techniques such as Huffman Coding or Arithmetic Coding depending on the compression needs.
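To make the trade-off concrete, here is a small worked calculation of the bit cost of the flagged scheme. It assumes 32-bit floats and 1-bit booleans as described above; the function names and the 90% grounded figure used afterwards are illustrative assumptions, not measurements from the game.

```cpp
#include <cstddef>

constexpr std::size_t kFloatBits = 32;

// Cost of writing x, y, and z unconditionally.
constexpr std::size_t NaivePositionBits()
{
    return 3 * kFloatBits;
}

// Cost of writing x, y, a 1-bit "z is zero" flag, and z only when non-zero.
constexpr std::size_t FlaggedPositionBits(float Z)
{
    return 2 * kFloatBits + 1 + (Z == 0.0f ? 0 : kFloatBits);
}

// Average cost per update when GroundedFraction of updates have z == 0.
constexpr double ExpectedFlaggedBits(double GroundedFraction)
{
    return GroundedFraction * static_cast<double>(FlaggedPositionBits(0.0f))
         + (1.0 - GroundedFraction) * static_cast<double>(FlaggedPositionBits(1.0f));
}
```

A grounded update costs 65 bits instead of 96, while an airborne update costs 97 bits, one more than the naive version. If 90% of updates are grounded, the average is about 68.2 bits, so the scheme only pays off when the common case really is common.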

A rather simple (but effective) approach was used for simulating latency and dropped packets:

- Assign a packet a timestamp (plus some amount of time to represent fake latency e.g. 200 ms) on arrival

- Add the packet to a queue (while occasionally tossing out a packet which simulates dropped packets)

- Only process packets in the queue that have a timestamp less than the current server time

This made it possible to test the Client-Side Prediction and Reliable UDP code under realistic conditions in local development.
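The three steps above can be sketched roughly as follows. This is a minimal illustration under assumptions, not the project's code: `LatencySimulator`, `Receive`, and `Poll` are hypothetical names, time is passed in as seconds, and drops are decided by a seeded random roll.

```cpp
#include <cstdint>
#include <queue>
#include <random>
#include <utility>
#include <vector>

using PacketBytes = std::vector<uint8_t>;

// Hypothetical helper that delays incoming packets and randomly drops some.
class LatencySimulator
{
public:
    LatencySimulator(double LatencySeconds, double DropChance, uint32_t Seed = 1234u)
        : mLatency(LatencySeconds), mDropChance(DropChance), mRng(Seed), mRoll(0.0, 1.0)
    {
    }

    // Called when a packet arrives from the socket at time Now.
    void Receive(PacketBytes Data, double Now)
    {
        // Occasionally toss the packet to simulate packet loss.
        if (mRoll(mRng) < mDropChance)
        {
            return;
        }
        // Stamp the packet with its arrival time plus the fake latency.
        mQueue.push(Delayed{ Now + mLatency, std::move(Data) });
    }

    // Returns the packets whose timestamp is earlier than Now, in arrival order.
    std::vector<PacketBytes> Poll(double Now)
    {
        std::vector<PacketBytes> Ready;
        while (!mQueue.empty() && mQueue.front().ReadyTime < Now)
        {
            Ready.push_back(std::move(mQueue.front().Data));
            mQueue.pop();
        }
        return Ready;
    }

private:
    struct Delayed
    {
        double ReadyTime;
        PacketBytes Data;
    };

    double mLatency;
    double mDropChance;
    std::mt19937 mRng;
    std::uniform_real_distribution<double> mRoll;
    std::queue<Delayed> mQueue;
};
```

Because the latency is constant, a plain FIFO queue keeps packets in order. Adding random jitter would need a priority queue keyed on the timestamp, which would also let the simulator exercise out-of-order delivery.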

As Glenn Fiedler writes in TCP vs UDP, TCP connections are reliable and ordered, at the cost of having to wait for packets to arrive and be processed in the correct order. UDP has no concept of a connection and no guarantee of reliability or ordering: packets may arrive out of order, be duplicated, or not arrive at all. While at first glance it sounds like UDP has only downsides, TCP's reliability and ordering requirements mean time spent waiting for packets to arrive in the correct order, resending packets that were dropped, and paying the computational overhead of that complexity.

For a fast paced game like Soldier Fight, waiting for a dropped packet to be resent by a TCP connection and acknowledged means a player might not get an update until hundreds of milliseconds later, which could mean the difference between winning and losing. Selecting UDP as the protocol was a natural fit. But the lack of reliability meant important packets could be dropped, such as when the server spawns a bullet from a player firing a gun.

To counteract this, a form of Reliable UDP was implemented to gain some of the benefits of TCP with less overhead.

- Host 1 assigns a packet a unique id

- Host 2 receives the packet, reads the id, and adds it to a list of ids to acknowledge later

- On its next outbound packet, Host 2 writes the list of ids it has received

- Host 1 receives that packet and reads the list of ids, which tells it whether each packet it sent was received or dropped

- If a packet was dropped, Host 1 resends its data using the latest state, because resending the packet unchanged could deliver stale data to Host 2
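The bookkeeping behind these steps might look like the sketch below. It is an illustration under assumptions rather than the actual implementation: `DeliveryTracker` and `AckCollector` are hypothetical names, and a packet is treated as dropped when no acknowledgement arrives within a timeout, which is one common way to make that decision.

```cpp
#include <cstdint>
#include <unordered_map>
#include <vector>

using PacketId = uint16_t;

// Sender side (Host 1): remembers which packets are still unacknowledged.
class DeliveryTracker
{
public:
    // Assigns a unique id to an outgoing packet and records when it was sent.
    PacketId TrackOutgoing(double Now)
    {
        PacketId Id = mNextId++;
        mInFlight[Id] = Now;
        return Id;
    }

    // Called with the ack list read from an incoming packet.
    void ProcessAcks(const std::vector<PacketId>& AckedIds)
    {
        for (PacketId Id : AckedIds)
        {
            mInFlight.erase(Id);
        }
    }

    // Returns ids that went unacknowledged for longer than Timeout. They are
    // forgotten here because the caller resends the latest state in a new packet.
    std::vector<PacketId> CollectDropped(double Now, double Timeout)
    {
        std::vector<PacketId> Dropped;
        for (auto It = mInFlight.begin(); It != mInFlight.end();)
        {
            if (Now - It->second > Timeout)
            {
                Dropped.push_back(It->first);
                It = mInFlight.erase(It);
            }
            else
            {
                ++It;
            }
        }
        return Dropped;
    }

private:
    PacketId mNextId = 0;
    std::unordered_map<PacketId, double> mInFlight;
};

// Receiver side (Host 2): collects ids to acknowledge on the next outbound packet.
class AckCollector
{
public:
    void OnPacketReceived(PacketId Id) { mPending.push_back(Id); }

    // Hands over the pending ids and leaves the list empty.
    std::vector<PacketId> TakePendingAcks()
    {
        std::vector<PacketId> Out;
        Out.swap(mPending);
        return Out;
    }

private:
    std::vector<PacketId> mPending;
};
```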

In Soldier Fight, when a player presses a key to move forward, the client sends the input to the server, the server processes the input and moves the player, and the player's updated position is sent in the next packet. While this works in local testing, real-world latency meant the player would only start moving 50 ms or more after pressing the key. This made the game feel sluggish, as everything was on a slight delay.

To counteract this, on the client we take a peek at the inputs the player is currently making. Then we can simulate the movement locally, updating the player's position.

```cpp
if (IsLocalPlayer())
{
    Move* PendingMove = GetPendingMove();
    if (PendingMove)
    {
        ProcessInput(PendingMove);
        SimulateMovement();
    }
}
```

When the next update is received from the server, if there's a difference between the two positions, the client pushes the player towards the server's position over a period of time. The player isn't directly moved to the new position because it would cause a disjointed experience as they keep teleporting to the server's position.

```cpp
if (GetLocation() != ServerLocation)
{
    Push(ServerLocation, 0.1f);
}
```
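The body of Push is not shown, so the following is only a guess at its shape. It assumes the second argument is the fraction of the remaining distance to cover per update, and it adds a snap distance so the correction eventually finishes; `Vec2`, `PushToward`, and `SnapDistance` are hypothetical names.

```cpp
#include <cmath>

struct Vec2
{
    float X;
    float Y;
};

// Moves Current a fraction of the way toward Target. Once the remaining
// distance is within SnapDistance, the target itself is returned so the
// two positions end up exactly equal.
inline Vec2 PushToward(Vec2 Current, Vec2 Target, float Fraction, float SnapDistance = 0.01f)
{
    float Dx = Target.X - Current.X;
    float Dy = Target.Y - Current.Y;
    if (std::sqrt(Dx * Dx + Dy * Dy) <= SnapDistance)
    {
        return Target;
    }
    return Vec2{ Current.X + Dx * Fraction, Current.Y + Dy * Fraction };
}
```

Snapping within a small tolerance also sidesteps the exact `!=` comparison on floating-point positions, which could otherwise keep triggering tiny corrections.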

While this works great for the local player, their view of other players is always delayed by the amount of latency they are experiencing. With higher latency, this means they will always be aiming at where the other player was and not where they are. To counteract this, enemy players have their current movement extrapolated based on the client's latency (e.g. if a player has a latency of 100 ms, the enemy players' positions are extrapolated 100 ms ahead). Then, when the next update from the server arrives, the enemies are pushed towards their real position, similar to the local player.
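In its simplest form, this kind of extrapolation is dead reckoning: advance the last known position by the last known velocity for the duration of the latency. The sketch below assumes constant velocity over that window; `Point2D` and `Extrapolate` are hypothetical names, and units are assumed to be world units and seconds.

```cpp
struct Point2D
{
    float X;
    float Y;
};

// Predicts where a remote player is now, given where the server last said
// they were, how fast they were moving, and how old that information is.
inline Point2D Extrapolate(Point2D LastPosition, Point2D Velocity, float LatencySeconds)
{
    return Point2D{ LastPosition.X + Velocity.X * LatencySeconds,
                    LastPosition.Y + Velocity.Y * LatencySeconds };
}
```

For example, an enemy last seen at (10, 5) moving at 200 units per second along X would be drawn at roughly (30, 5) on a client with 100 ms of latency.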

To streamline finding matches, Soldier Fight was integrated with the Steamworks SDK. When a server starts up, it creates a lobby on Steam and sets the server details on it. When a client wants to join a game, it searches Steam matchmaking, which returns a list of Soldier Fight lobbies. Upon joining a lobby, the client retrieves the server details and connects to the server.

The downside with this approach is it doesn't take advantage of Steam's NAT traversal. To do so would require swapping out the networking code to rely on Steam's networking implementation instead. While viable, it didn't align with the spirit of the project.

While I am finished working on Soldier Fight for now, there is still some room for improvement before I would consider it production worthy:

- RPCs

- Improve the dev experience when adding new serialization fields (via reflection)

- Server Side Rewind

- NAT punch-through

- Text chat

- Better movement physics